Table of Contents

- Overview

- Open API

- Event Streaming

- Business Automation

- Distributed Cache and Data Services

- Agile Integration

- System Monitoring

- Microservices Management

- Standard Message Format

- Scalability and Agility

- Summary

Overview

In my previous post, I briefly talked about event-based architecture for payment platform modernization and why the modernization is important to the industry. In this post, we will dive into the details of the key capabilities to consider for payment platform modernization. The capabilities covered here may not be a complete list, but they serve as a good start.

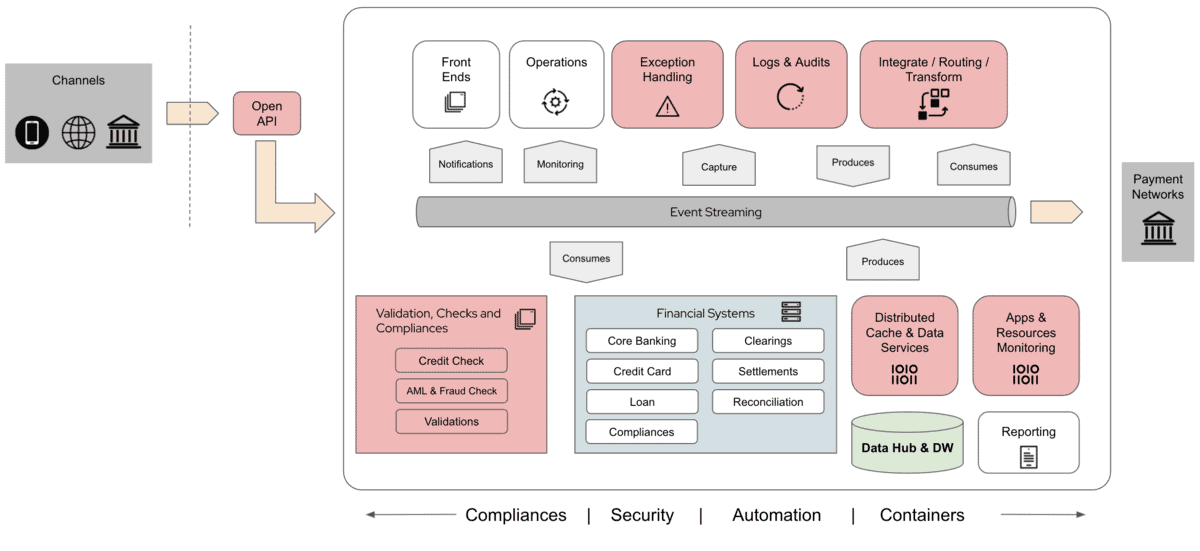

Diagram 1: Event-Based Payment Architecture

I am including the above diagram again to refresh our memory of the event-driven architecture that we covered in the previous post.

Let us dive into the details in the next sections.

Open API

Open API provides a secure channel for your customers to perform payment transactions via your payment services, from third-party bankers or financial institutions. Open API is equally important for internal APIs because the biggest cybersecurity threats are usually internal.

Your payment services business partners will be able to sign up for your APIs, run mock tests against them, and monitor their API usage via a self-service platform.

An Open API platform provides control and management so that you can easily configure and expose your APIs to the outside world. It enables capabilities such as controlling API rate limits, application plans, security policies and user management.
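To make the idea of per-plan rate limits concrete, here is a minimal sketch of a token-bucket limiter. It is not how any particular API management product implements limits; the plan names, rates and the `limiter_for` helper are hypothetical, and the clock is passed in explicitly to keep the example deterministic.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    """Token bucket: `capacity` is the burst size, `refill_per_sec` the sustained rate."""
    capacity: float
    refill_per_sec: float
    tokens: float = 0.0
    last_seen: float = 0.0

    def __post_init__(self) -> None:
        # Start with a full bucket so a new API client can burst immediately.
        self.tokens = self.capacity

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` (seconds) is admitted."""
        elapsed = max(0.0, now - self.last_seen)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last_seen = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical application plans: (requests per second, burst size).
PLANS = {"basic": (1.0, 2.0), "premium": (10.0, 20.0)}


def limiter_for(plan: str) -> TokenBucket:
    """Build a limiter for one API client based on its application plan."""
    rate, burst = PLANS[plan]
    return TokenBucket(capacity=burst, refill_per_sec=rate)
```

A gateway would keep one bucket per API key and call `allow()` on each request, rejecting the request when it returns `False`.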

In short, the Open API platform enables you to share, secure, distribute, control, and monetize your APIs on an infrastructure platform built for performance, customer control, and future growth.

You might be saying Open API is not new. You are absolutely correct.

However, the more it has become business as usual, the more likely we are to overlook Open API in our initial assessment exercise. We need to ascertain that the necessary changes or enhancements, if any, are made to our existing Open API so that the APIs integrate well with our new event-based payment platform.

For instance, we may need to ask questions such as: can the Open API integrate with the event streaming platform? What data formats and protocols are supported?

In case you are wondering, Red Hat provides an Open API platform via Red Hat Integration.

Event Streaming

An event streaming platform provides a high-performance environment for client applications to consume and produce messages with durability and availability. For a payment platform, it is important that the event streaming platform preserves the order of the messages. This ensures that debit and credit transactions are processed in the proper order, which maintains payment data integrity.

In event streaming, consumers are the client applications that read messages from the event streaming platform. Producers are the applications that send messages to it. Messages refer to any form of data that your applications can understand.

The event streaming platform comes with durability: it provides a data store with a definable retention period for how long you wish to keep the data for playback. Multiple applications can consume the same messages any number of times, as long as the messages are still available in the data store.

The platform ensures data availability and consistency, so your applications can resume consuming or producing messages after the systems experience downtime.
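The properties described above (ordering, replay by several applications, resuming from where you left off) can be illustrated with a toy in-memory log. This is only a sketch of the concepts, not a Kafka client: the `EventLog` class and its method names are made up for this example, and a real platform persists the log and the offsets.

```python
import zlib
from collections import defaultdict


class EventLog:
    """Toy in-memory stand-in for a partitioned, replayable event log."""

    def __init__(self, partitions: int = 3) -> None:
        self.partitions = [[] for _ in range(partitions)]
        # Each consumer group tracks its own read position per partition.
        self.offsets = defaultdict(lambda: [0] * partitions)

    def produce(self, key: str, value: dict) -> int:
        """Append an event and return the partition it was written to."""
        # Same key -> same partition, so events for one account stay in order.
        p = zlib.crc32(key.encode()) % len(self.partitions)
        self.partitions[p].append((key, value))
        return p

    def consume(self, group: str) -> list:
        """Return all unread events for `group` and advance its offsets."""
        out = []
        for p, log in enumerate(self.partitions):
            start = self.offsets[group][p]
            out.extend(log[start:])
            self.offsets[group][p] = len(log)
        return out
```

Because the account id is used as the key, a debit followed by a credit on the same account is always read back in that order, and two groups (say `"ledger"` and `"audit"`) each receive the full stream independently.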

Why do you need event streaming for payment platform modernization?

Other than high performance and data availability, the way messages are consumed and produced, coupled with message durability, means the microservices can be implemented in such a way that each microservice is independent.

There are a few benefits of doing so.

You can implement each of these applications faster and deliver your business functions to production faster. This provides a competitive advantage your competitors might not have.

Your applications only need to consume and produce just enough data from the event-streaming platform. This can mean many things. Developers can focus on the business logic implementation. The application team will not over-design the applications. There will not be direct complex integrations between these applications. Applications can be built and deployed without affecting others.

In other words, you will have an agile, scalable and easy-to-maintain application landscape in your modernised payment platform.

With the high performance provided by event streaming, your payment system can perform better under heavy workloads. You will have happy customers and business partners when payments are cleared on time or ahead of schedule.

Message durability and playback also allow your applications to process multiple messages from different sources in a definable time window. One such use case is consolidating payment audit trails from multiple sources at different stages of processing. More detail on this use case can be found in this post.
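As a rough illustration of the audit trail use case, the sketch below groups events from several sources by payment and keeps only those that arrive within a time window of the first event. The field names (`payment_id`, `ts`, `source`, `stage`) are assumptions made for this example, not a schema from any product.

```python
from collections import defaultdict


def consolidate(events: list, window_seconds: float) -> dict:
    """Group audit events by payment id, keeping those that fall within
    `window_seconds` of the first event seen for that payment."""
    trails = defaultdict(list)
    first_seen = {}
    # Replay in timestamp order, as if reading back from the durable log.
    for event in sorted(events, key=lambda e: e["ts"]):
        pid = event["payment_id"]
        first_seen.setdefault(pid, event["ts"])
        if event["ts"] - first_seen[pid] <= window_seconds:
            trails[pid].append((event["source"], event["stage"]))
    return dict(trails)
```

Events that fall outside the window are simply dropped here; a production implementation would more likely route them to an exception flow.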

One example of such an event streaming platform is Red Hat AMQ Streams, which is based on Apache Kafka.

Business Automation

Business automation refers to using workflows and business rules to automate the payment processes.

The business automation solution should allow us to implement the workflows and business rules using a declarative or low-code approach.

The business automation solution must be able to scale together with your event streaming platform. It must be able to keep up with the rapidly incoming payment event messages to ensure SLAs are met.

Another thing we should look for is a standard approach to implementing the payment workflows and business rules, based on BPMN 2.0 and DMN.

What are the payment business automation use cases?

There are many.

You can implement business rules for payment validations such as credit checks, AML (anti-money laundering) checks and fraud checks.

The validation services can be Excel-like decision tables that can be modified from a GUI (graphical user interface). The services can consume relevant payment transaction messages from the event-streaming platform and use this data to perform the payment validations in real time. When the validations are done, the responses can be produced into the event-streaming platform for the next stage of processing in the payment transaction flow.
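A decision table can be thought of as rows of conditions and outcomes that are kept separate from the code that evaluates them. The sketch below shows that idea in plain Python; it is not DMN or Drools syntax, and the thresholds, country code and field names are hypothetical placeholders.

```python
# Each row: (rule name, condition over the payment, decision if it matches).
# The values below are placeholders, not real compliance thresholds.
DECISION_TABLE = [
    ("sanctioned country", lambda p: p["country"] in {"XX"}, "REJECT"),
    ("large amount", lambda p: p["amount"] > 10_000, "REVIEW"),
    ("insufficient credit", lambda p: p["amount"] > p["credit_limit"], "REJECT"),
]


def validate(payment: dict) -> dict:
    """Evaluate rows top to bottom; the first matching row decides the outcome."""
    for name, matches, decision in DECISION_TABLE:
        if matches(payment):
            return {"payment_id": payment["id"], "decision": decision, "rule": name}
    return {"payment_id": payment["id"], "decision": "APPROVE", "rule": None}
```

In an event-driven setup, `validate` would be called for each payment message consumed, and its result produced back to the event-streaming platform as the validation response.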

Another example is business automation for exception handling. Whenever there are transaction exceptions such as system errors or payment violations, a workflow is initiated based on the exception messages produced in the event streaming platform.

Business automation allows you to implement human tasks where authorised personnel can be notified and log into the system to verify the exceptions. In most cases, because payment is a regulated industry, you will need to implement a maker-checker workflow process for exception cases such as payment violations.
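The two-person approval described above (one user proposes a resolution, a different user approves it) boils down to a small state machine. The following is a minimal sketch with made-up state names and a hypothetical `ExceptionCase` class; a BPMN engine would model the same thing as human tasks in a process.

```python
class ExceptionCase:
    """Two-person control: one user proposes a resolution, another approves it."""

    def __init__(self, payment_id: str, reason: str) -> None:
        self.payment_id = payment_id
        self.reason = reason
        self.state = "OPEN"
        self.maker = None
        self.resolution = None

    def propose(self, maker: str, resolution: str) -> None:
        if self.state != "OPEN":
            raise ValueError(f"cannot propose in state {self.state}")
        self.maker = maker
        self.resolution = resolution
        self.state = "PENDING_APPROVAL"

    def approve(self, checker: str) -> None:
        if self.state != "PENDING_APPROVAL":
            raise ValueError(f"cannot approve in state {self.state}")
        if checker == self.maker:
            # The whole point of the control: nobody approves their own proposal.
            raise PermissionError("checker must be a different user from the maker")
        self.state = "RESOLVED"
```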

What are the benefits of using business automation?

It allows you to implement automated payment processing that can help to cut down unnecessary human intervention and indirectly reduce the payment processing time, which results in a happier customer environment.

Being able to make changes to the business workflow and business rules in a low-code approach means being able to roll out the business changes faster. This means you are more competitive in the payment industry.

In the open source world, jBPM, Drools and Kogito are among the projects that provide business automation and decision services. Red Hat carries these open source solutions in a commercial product called Red Hat Business Automation.

Distributed Cache and Data Services

Distributed cache provides fast access to frequently used data for your payment services. It is an in-memory, distributed, elastic NoSQL key-value datastore that your applications can call to access frequently used data such as customer name, address and account details.
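A common way for a service to use such a cache is the cache-aside pattern: look in the cache first and only go to the database on a miss. The sketch below uses a single-process dictionary as a stand-in for the distributed cache; the `TTLCache` class, the `get_customer` helper and the expiry policy are assumptions for illustration, not the API of any data grid product.

```python
class TTLCache:
    """Single-process stand-in for a distributed key-value cache with expiry."""

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl = ttl_seconds
        self._data = {}

    def get(self, key, now: float):
        entry = self._data.get(key)
        if entry is None or now >= entry[1]:
            # Missing or expired: drop it and report a miss.
            self._data.pop(key, None)
            return None
        return entry[0]

    def put(self, key, value, now: float) -> None:
        self._data[key] = (value, now + self.ttl)


def get_customer(customer_id, cache: TTLCache, load_from_db, now: float):
    """Cache-aside read: try the cache, fall back to the database on a miss."""
    customer = cache.get(customer_id, now)
    if customer is None:
        customer = load_from_db(customer_id)
        cache.put(customer_id, customer, now)
    return customer
```

The time-to-live bounds how stale cached customer details can become; choosing it is a trade-off between freshness and database load.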

For payment microservices that are coupled with a database, data services provide a mechanism to exchange database data among the microservices without compromising data integrity, while maintaining the atomicity of each microservice. The data services also provide mechanisms to share this data with other backend systems, such as existing financial systems.

The data services perform non-intrusive data capture from multiple databases to ensure data is available immediately to the payment system for consumption and processing. In this case, we are also looking for a data service that can integrate with the event-streaming platform to complete our payment architecture.

We can use the same data service to stream the operational data from the payment platform to your data hub and data warehouse (DW) for operational and BI reporting.

The benefits are obvious.

Besides the better performance that comes from caching and instant data availability, using the distributed cache and data services decouples your applications from the complexity of the underlying data structure by providing normalized and standardized common data models.

Red Hat provides Red Hat Data Grid for distributed cache management and Red Hat Debezium for the data services.

Agile Integration

You will have a number of services in the payment system. You need an integration solution to allow the services to interact with each other and exchange information via different protocols and data formats. This integration solution must be able to support both the payment services and the backend systems (modern and legacy systems).

Instead of a traditional integration approach, you need a distributed and interactive integration architecture. More precisely, you need an agile integration architecture, rather than a centralized and siloed one. This is an architectural framework that aligns containerized microservices, hybrid cloud, and application programming interfaces (APIs) with the agile and DevOps practices that developers know well.

Centralized integration, usually a siloed and traditional waterfall approach, inhibits rapid application delivery: changes take weeks rather than days.

Agile integration combines integration technologies, agile delivery techniques, and cloud-native platforms to improve the speed and security of software delivery. Specifically, agile integration involves deploying integration technologies like APIs into Linux containers and extending integration roles to cross-functional teams.

Agile integration empowers developers to implement integration services that work well alongside the microservices, because the integration services are microservices themselves.

In short, agile integration changes enterprise integration from a problem that must be overcome, to a platform for elastic scalability across decentralized services.

System Monitoring

On top of payment transaction auditing and logging, you need to be able to monitor the performance of your payment transactions and to support your payment business operations.

Operational reports

Today's organisations need a complete 360-degree customer view, plus a structural and tactical view of daily business operations. This is the minimum reporting capability required for successful customer insights and business operations.

Why is it so hard to have rounded operational reports?

The answer is the data. It is not just about how complete our data is; it is also crucial to have the data at the right time and in the right place.

In other words, an operational report is only as good as the underlying data that you have in your data warehouse.

In our event-driven payment platform, we need a solution that can capture these ever-growing and changing event messages. This is where the data service that we covered in the previous section comes in handy.

For example, you can implement an event-based change data capture (CDC) to stream and capture the business and operational data.
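To show what consuming CDC events can look like on the receiving side, here is a minimal sketch that applies a stream of change events to a target table. The event shape (`op`, `before`, `after`) is loosely modelled on the envelope Debezium emits, but heavily simplified; the `id` primary key and the `apply_change` function are assumptions made for this example.

```python
def apply_change(table: dict, event: dict) -> None:
    """Apply one change event to `table`, a dict keyed by primary key."""
    op = event["op"]
    if op in ("c", "u"):
        # Create or update: take the new row image.
        row = event["after"]
        table[row["id"]] = row
    elif op == "d":
        # Delete: the old row image carries the key to remove.
        table.pop(event["before"]["id"], None)
    else:
        raise ValueError(f"unsupported op: {op!r}")
```

Replaying the events in order leaves the target table in the same state as the source, which is what lets the data warehouse stay close to real time without batch exports.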

With complete operational data available in the data warehouse, the rest of the job is a matter of how well your BI (business intelligence) helps you translate this data into information for better business decisions.

You can take a look at this excellent data warehouse ETL use case using Debezium. Convoy built a low-latency ETL pipeline to provide real-time business data.

Data that used to take 10–15 minutes to import now frequently took 1–2 hours, and for some of the larger datasets you could expect latencies of 6+ hours. As you can imagine, knowing where a truck was 2 hours ago when it’s due at a facility now isn’t very useful. Several teams had already started building workarounds to pull real-time data from our production systems with varying degrees of success, and it became increasingly clear that access to low latency data was critical.

The result is a reduction from hours of ETL batch processing to minutes.

Source: Adrian Kreuziger

Application Resource Monitoring and Dashboard

The data center operations team needs a way to monitor system resource usage and performance. They need to be notified of resource overload events and to be able to react before the situation gets out of control. This is especially important for event-driven platforms, where we expect event-based transactions that are rapid, multi-source, time-sensitive and high in volume.

The performance metrics need to be part of the payment services and applications that you are implementing, but developers should not need to worry about how they are implemented. Metrics should be a declarative feature: the moment a service is deployed onto the server, its metrics are available immediately to the monitoring system.
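One way to read "declarative" here is that a developer only annotates a function and the measurement happens around it. The sketch below does this with a plain Python decorator and in-memory counters; the `measured` decorator and the registry dictionaries are hypothetical, and a real service would use a metrics library and expose the values to the monitoring system.

```python
import functools
import time
from collections import defaultdict

# Process-wide registry; a real service would expose this for scraping.
CALLS = defaultdict(int)
ERRORS = defaultdict(int)
SECONDS = defaultdict(float)


def measured(name: str):
    """Decorator that records call count, error count and total latency."""
    def wrap(fn):
        @functools.wraps(fn)
        def inner(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            except Exception:
                ERRORS[name] += 1
                raise
            finally:
                # Runs on both success and failure.
                CALLS[name] += 1
                SECONDS[name] += time.perf_counter() - start
        return inner
    return wrap


@measured("authorize_payment")
def authorize_payment(amount: float) -> str:
    """Business logic stays free of any metrics code."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    return "AUTHORIZED"
```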

The monitoring system should be part of the payment platform and provide out-of-the-box resource and performance time series metrics in a dimensional data model. At the same time, it should provide powerful queries, precise alerting, simple operation and great visual dashboards.

Prometheus and Grafana are among the popular open source solutions for the monitoring implementation.

Microservices Management

Payment microservices can become large and complex in terms of communications and numbers. You should consider a service mesh to provide a platform and framework that effectively manages, secures and routes requests for microservices.

In a larger microservices environment such as the payment platform, service failures can happen at any time. Without the right tooling, it can be nearly impossible to identify and locate these problems in time.

Service mesh also captures every aspect of service-to-service communication as performance metrics. Over time, data made visible by the service mesh can be applied to the rules for interservice communication, resulting in more efficient and reliable service requests.

Service mesh provides distributed tracing (Jaeger) as part of the solution, allowing the application team to monitor, trace and troubleshoot the applications.
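The core idea behind distributed tracing is that every hop of a request carries the same trace id and points back to the span that called it. The sketch below shows only that propagation, with spans collected in memory; it does not use the Jaeger client or any mesh API, and the `Tracer` class and service names are made up for illustration.

```python
import uuid
from typing import Optional


class Tracer:
    """Collects spans in memory; a real tracer would ship them to a backend."""

    def __init__(self) -> None:
        self.spans = []

    def start_span(self, service: str, trace_id: Optional[str] = None,
                   parent_id: Optional[str] = None) -> dict:
        span = {
            "trace_id": trace_id or uuid.uuid4().hex,  # new trace if none given
            "span_id": uuid.uuid4().hex[:16],
            "parent_id": parent_id,
            "service": service,
        }
        self.spans.append(span)
        return span


def validate_payment(tracer: Tracer, headers: dict) -> None:
    # The downstream service continues the caller's trace.
    tracer.start_span("validation", headers["trace_id"], headers["parent_id"])


def handle_payment(tracer: Tracer) -> str:
    root = tracer.start_span("payment-api")
    # The trace context travels with the outgoing request, e.g. in headers.
    headers = {"trace_id": root["trace_id"], "parent_id": root["span_id"]}
    validate_payment(tracer, headers)
    return root["trace_id"]
```

With a service mesh, the sidecar proxies report these spans for you, although applications typically still need to forward the trace headers on outgoing calls.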

Standard Message Format

As the payment services grow along with the business and customer base, the challenge of enforcing the message and data formats becomes increasingly complex as well. You need a solution that helps you ensure each of the services conforms to the standard message schemas that your organization has been carefully curating.

Service Registry is a datastore for sharing standard event schemas and API designs across API and event-driven architectures. You can use Service Registry to decouple the structure of your data from your applications, and to share and manage your data types and API descriptions at runtime using a REST interface.

Using Service Registry to decouple your data structure from your applications reduces costs by decreasing overall message size, and creates efficiencies by increasing consistent reuse of schemas and API designs across your organization.

In addition, you can configure optional rules to govern the evolution of your registry content. For example, these include rules to ensure that uploaded content is syntactically and semantically valid, or is backwards and forwards compatible with other versions. Any configured rules must pass before new versions can be uploaded to the registry, which ensures that time is not wasted on invalid or incompatible schemas or API designs.
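To give a feel for what a compatibility rule checks, here is a minimal sketch of a backward-compatibility test between two schema versions. The schema shape (a dict of field name to type and optional default) is a simplified assumption for this example and is not the format or API of Service Registry; the rule itself follows the usual Avro-style reasoning that a newly added field needs a default so that old messages can still be read.

```python
def backward_compatible(old: dict, new: dict) -> list:
    """Return the problems that would stop a consumer using `new` from
    reading messages written with `old`. An empty list means compatible.

    Schemas are a simplified, hypothetical shape:
    {field_name: {"type": str, "default": optional value}}.
    """
    problems = []
    for name, spec in new.items():
        if name not in old:
            # Old messages lack this field, so the reader needs a default.
            if "default" not in spec:
                problems.append(f"new field '{name}' has no default")
        elif old[name]["type"] != spec["type"]:
            problems.append(
                f"field '{name}' changed type from "
                f"{old[name]['type']} to {spec['type']}"
            )
    return problems
```

A registry would run a check like this when a new version is uploaded and reject the upload if the list of problems is not empty.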

Scalability and Agility

Above all, we need to ensure the payment systems will be able to scale horizontally and vertically without issue.

Pre-allocating server resources for scalability and high availability is a thing of the past. This old approach leaves many servers underutilized in the data centres and wastes many organizations' money and resources. I should add that it is not green either!

We need an agile approach to ensure our applications can scale and be available with the current resources, and these resources can be shared with other applications that we have in the company. This will help to fully utilize the existing hardware investment to run multiple applications.

The applications can coexist on the same infrastructure without compromising performance. The platform should allow us to allocate server resources dynamically based on application load, such as CPU and memory utilization. This is possible because, in a real business environment, not all applications peak at the same time.
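As a worked example of load-based scaling, the sketch below computes a target replica count from current and desired utilization. It follows the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, simplified here (no tolerance band or stabilization window); the function name and the default bounds are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_utilization: float,
                     target_utilization: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to how far utilization is from target."""
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))
```

For instance, four replicas running at 90% CPU against a 60% target give `ceil(4 * 90 / 60) = 6` replicas, while the same four replicas at 30% would shrink to two.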

Applications should be designed as microservices or cloud-native applications and be deployed onto a highly scalable platform such as Kubernetes or Red Hat OpenShift Container Platform. This gives companies the option to deploy the platform in a public cloud, private cloud or hybrid-cloud environment.

Summary

In this post, we covered the key capabilities to consider for payment platform modernization based on event-driven architecture. The capabilities covered may not be a complete list, but they serve as a good start.

Please let me know what you think of the above by leaving your feedback in the comment section below.