Table of Contents

- Overview

- What is Kogito?

- The Overall Architecture

- Payment Exceptions – Credit Check, Validations and Fraud Check

- The Payment Exceptions Handling

- Watch the Demo on YouTube

- To Deploy the Sample Demo

- Summary

Overview

As part of the payment platform modernization, let's look at how we can implement event-based payment exception handling that adheres to what we covered in my previous post, Modernise the Payment Platform with Event-Based Architecture.

We are going to go through how we can use the same event-driven architecture to implement credit check and validation, fraud check, and exception handling, using the decision services and business automation capabilities provided by Kogito.

As always, I will walk you through a deployable solution that you can try out yourself.

Before we jump into the details, let’s take a quick look at what Kogito is.

What is Kogito?

Kogito is a cloud-native business automation technology for building cloud-ready business applications.

Kogito is optimized for hybrid cloud environments and adapts to your business domain and tooling needs. The core objective of Kogito is to help you mold a set of business processes and decision services into your own domain-specific, cloud-native set of services.

When you use Kogito, you are building a cloud-native application as a set of independent domain-specific services to achieve some business value. The processes and decisions that you use to describe the target behavior are executed as part of the services that you create. The resulting services are highly distributed and scalable with no centralized orchestration service, and the runtime that your service uses is optimized for what your service needs.

Kogito includes components that are based on well-known business automation KIE projects, specifically Drools, jBPM, and OptaPlanner, to offer dependable open source solutions for business rules, business processes, and constraint solving.

In short, choose Kogito if you need to implement low-code business rules (or decision rules) and business workflows (maker-checker, etc.), with one additional benefit: it is cloud-native.

The Overall Architecture

This post builds further on my previous post, A True Atomic Microservices Implementation with Debezium to Ensure Data Consistency, so you may notice the familiar outbox pattern from that post.

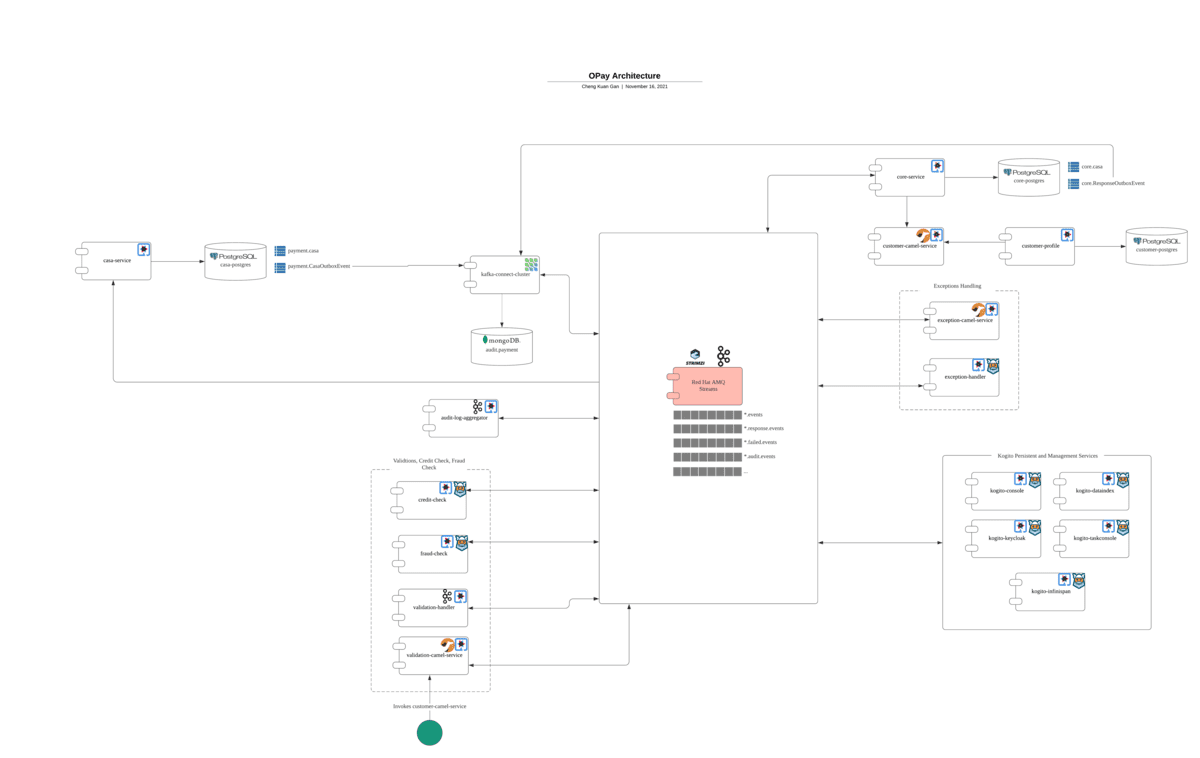

Many services have been introduced to support the payment exception handling demo. As a result, the architecture diagram shows not only the payment exception handling services but also the other services that complete the solution.

To summarize some of the enhancements made to the demo in this post:

- audit-log-aggregator is enhanced to capture additional audit trails from new services such as checklimit-service, checkfraud-service and exception-handler. It is implemented with the Kafka Streams API: it captures audit information from the respective Kafka topics and consolidates the audit trails into one entry per transaction, so that they can be streamed into the MongoDB database using the MongoDB Kafka connector.

- A set of Kogito core services is introduced for Kogito process instance persistence and process management.

- A set of Quarkus-based Kogito services, with supporting Apache Camel services, is introduced for credit check, fraud check and exception handling.
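The post does not show the aggregator's code. As a rough sketch of the consolidation idea only, the plain Java below folds audit events into one entry per transaction ID, which is the job a keyed aggregation step would do in a Kafka Streams topology. The AuditEvent and AuditTrail shapes, the source names used as keys, and the status values are assumptions for illustration; there is no Kafka or MongoDB code here (Java 17).

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical audit event as read from one of the audit source topics.
record AuditEvent(String transactionId, String source, String status) {}

// Hypothetical consolidated entry: one per transaction, one status per source service.
final class AuditTrail {
    final String transactionId;
    final Map<String, String> statusBySource = new LinkedHashMap<>();

    AuditTrail(String transactionId) {
        this.transactionId = transactionId;
    }
}

// In-memory stand-in for a keyed aggregation (no Kafka Streams state store involved).
final class AuditTrailAggregator {
    private final Map<String, AuditTrail> trails = new LinkedHashMap<>();

    // Fold one event into the entry for its transaction; a later event from the
    // same source overwrites the earlier status from that source.
    AuditTrail add(AuditEvent event) {
        AuditTrail trail = trails.computeIfAbsent(event.transactionId(), AuditTrail::new);
        trail.statusBySource.put(event.source(), event.status());
        return trail;
    }

    int size() {
        return trails.size();
    }
}
```

In a Kafka Streams topology, the same fold would typically sit inside `groupByKey().aggregate(...)`, with the aggregated result written to the topic that the MongoDB sink connector reads from.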

Each payment transaction always results in multiple events at different stages of payment processing. As shown in the centre of the architecture diagram above, Apache Kafka, as the streaming platform, sits at the centre of all payment events. This holds true for our Kogito services as well.

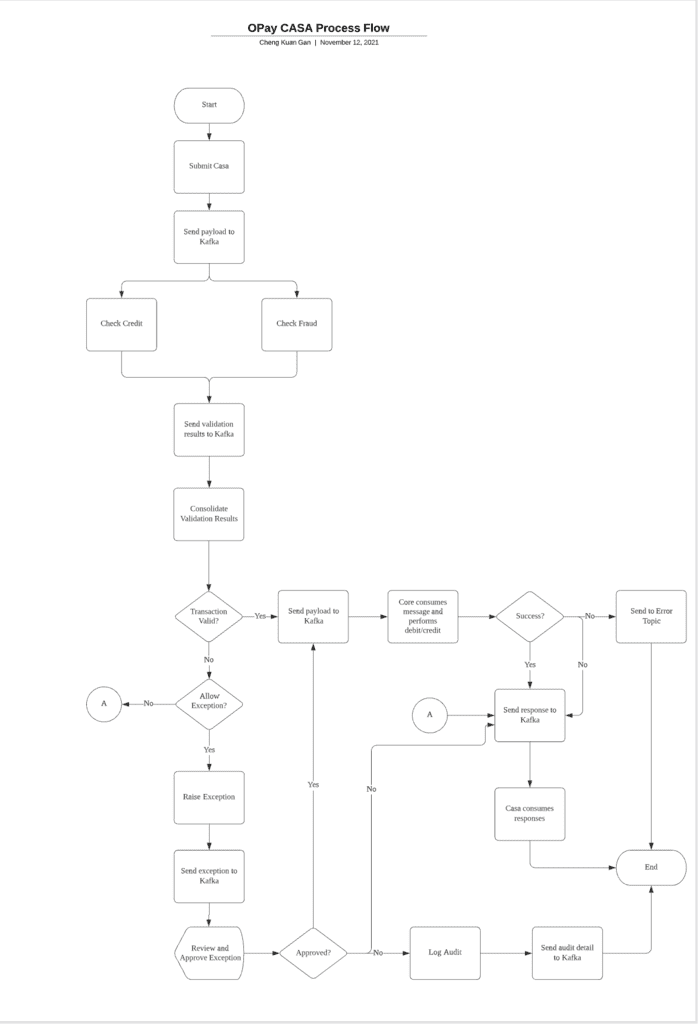

To help you understand the demo, the process flow diagram illustrates the complete flow of a CASA (current account and savings account) transaction.

Payment Exceptions – Credit Check, Validations and Fraud Check

The implementation assumes we are creating new credit check and fraud check services from scratch. However, this should not stop you from reusing your existing credit check, fraud check and AML services.

In the latter case, the Kogito decision services add value on top of your existing services by providing a single consolidated service in front of them.

In both cases, the capabilities offered by Kogito allow us to implement decision services that work seamlessly with the event streaming platform, ensuring that we do not sacrifice our event-driven architecture principles.

Payment Exception – Credit Check Implementation

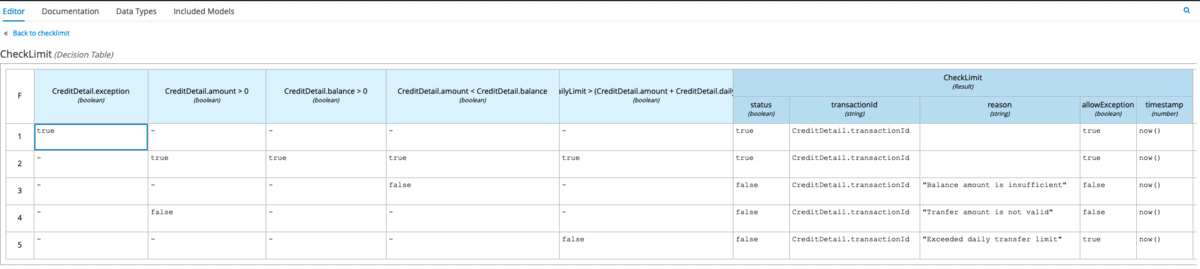

The Credit Check implementation is illustrated in the Excel-like decision table. You can access the diagram source on GitHub here. To view the diagram in editor mode, you need Visual Studio Code with the Kogito plugin. Another option is to use the online Kogito Business Model viewer.

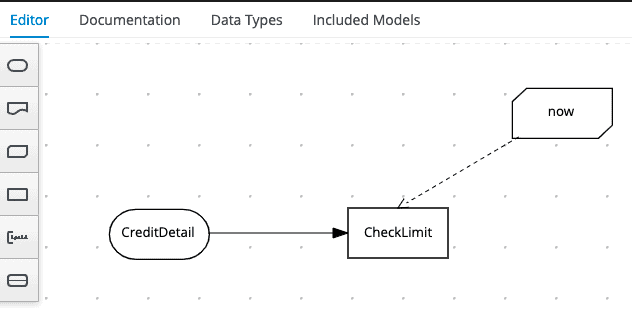

The service is named checklimit-service, as defined in the pom.xml. The decision service takes CreditDetail as input from a Kafka topic (defined in application.properties), as shown in the editor screen.

There are multiple decision rules that evaluate the CreditDetail of a payment transaction. For example, we validate the transaction amount (CreditDetail.amount), the source account balance (CreditDetail.balance), and so on. Essentially, we want to verify that the transaction amount is valid, that the source account has a sufficient balance, and that the transaction does not exceed the daily transaction limit.

The matching results of the evaluations are defined on the right-hand side of the decision table (the columns in darker cyan). The result is sent to a Kafka topic to be consumed by the next interested service. The destination Kafka topic is also defined in application.properties.
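To make the decision table more concrete, here is a plain-Java approximation of the three checks described above. It is not generated from the DMN model: the dailyLimit and dailySpent fields, the result values, and the top-to-bottom first-match order (similar to a DMN FIRST hit policy) are assumptions; only CreditDetail.amount and CreditDetail.balance come from the post.

```java
import java.math.BigDecimal;

// Hypothetical mirror of the CreditDetail input; only amount and balance are named in the post.
record CreditDetail(BigDecimal amount, BigDecimal balance,
                    BigDecimal dailyLimit, BigDecimal dailySpent) {}

// Hypothetical outcome values; the real table defines its own output columns.
enum CreditCheckResult { INVALID_AMOUNT, INSUFFICIENT_BALANCE, DAILY_LIMIT_EXCEEDED, PASSED }

final class CreditCheckRules {
    // Rules are evaluated top to bottom and the first match wins.
    static CreditCheckResult evaluate(CreditDetail d) {
        // Rule 1: the amount must be positive.
        if (d.amount().signum() <= 0) {
            return CreditCheckResult.INVALID_AMOUNT;
        }
        // Rule 2: the source account must cover the amount.
        if (d.amount().compareTo(d.balance()) > 0) {
            return CreditCheckResult.INSUFFICIENT_BALANCE;
        }
        // Rule 3: today's total including this payment must stay within the daily limit.
        if (d.dailySpent().add(d.amount()).compareTo(d.dailyLimit()) > 0) {
            return CreditCheckResult.DAILY_LIMIT_EXCEEDED;
        }
        return CreditCheckResult.PASSED;
    }
}
```

Keeping these rules in a DMN table rather than in code like this is what lets business users read and change them without a redeploy of hand-written logic.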

The following shows part of application.properties. The incoming stanza configures the Kafka topic from which we consume messages; in this case it uses the smallrye-kafka connector and the topic is opay.checklimit. The outgoing stanza is configured similarly, with the topic opay.checklimit.response.

```properties
mp.messaging.incoming.kogito_incoming_stream.group.id=opay-checklimit
mp.messaging.incoming.kogito_incoming_stream.connector=smallrye-kafka
mp.messaging.incoming.kogito_incoming_stream.topic=opay.checklimit
mp.messaging.outgoing.kogito_outgoing_stream.group.id=opay-checklimit
mp.messaging.outgoing.kogito_outgoing_stream.connector=smallrye-kafka
mp.messaging.outgoing.kogito_outgoing_stream.topic=opay.checklimit.response
```

The Kogito service expects its input and output to be in CloudEvents format. Our existing Quarkus microservices have used plain JSON from the beginning, so to meet this requirement we introduce a Quarkus Camel service called validation-camel-service. It converts the JSON data from the casa.new.topic Kafka topic and, at the same time, enriches it with additional information such as the source account balance and transaction limit, before it is consumed by checklimit-service.
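As a sketch of what validation-camel-service does conceptually, the plain Java below enriches a flat payment map with extra fields and wraps it in a structured-mode CloudEvents 1.0 JSON envelope (specversion, id, source, type, datacontenttype, data). It does not use Camel or a CloudEvents SDK; the field names balance and dailyLimit, the type and source values, and the tiny JSON writer are illustrative assumptions. Check the Kogito documentation for the exact event type and extension attributes a Kogito decision service expects.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.UUID;

final class CloudEventWrapper {

    // Copy the original payment fields and add the values the decision needs.
    // balance and dailyLimit are hypothetical names; in the demo they come from lookups.
    static Map<String, Object> enrich(Map<String, Object> payment, Object balance, Object dailyLimit) {
        Map<String, Object> data = new LinkedHashMap<>(payment);
        data.put("balance", balance);
        data.put("dailyLimit", dailyLimit);
        return data;
    }

    // Wrap the data in a structured-mode CloudEvents 1.0 JSON envelope.
    static String wrap(Map<String, Object> data, String type, String source) {
        Map<String, Object> event = new LinkedHashMap<>();
        event.put("specversion", "1.0");
        event.put("id", UUID.randomUUID().toString());
        event.put("source", source);
        event.put("type", type);
        event.put("datacontenttype", "application/json");
        event.put("data", data);
        return toJson(event);
    }

    // Minimal JSON writer for nested maps of strings, numbers and booleans
    // (sketch only: it does not escape control characters).
    static String toJson(Map<String, Object> map) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, Object> e : map.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append(quote(e.getKey())).append(':');
            Object v = e.getValue();
            if (v instanceof Map) {
                @SuppressWarnings("unchecked")
                Map<String, Object> nested = (Map<String, Object>) v;
                sb.append(toJson(nested));
            } else if (v instanceof Number || v instanceof Boolean) {
                sb.append(v);
            } else {
                sb.append(quote(String.valueOf(v)));
            }
        }
        return sb.append('}').toString();
    }

    // Quote a string, escaping backslashes and double quotes.
    private static String quote(String s) {
        return '"' + s.replace("\\", "\\\\").replace("\"", "\\\"") + '"';
    }
}
```

In the actual service this conversion is presumably expressed as a Camel route; the sketch only shows the shape of the message that reaches checklimit-service.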

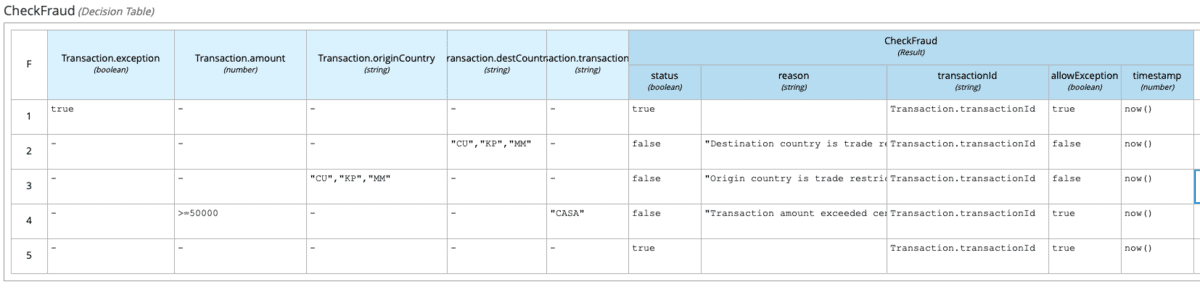

Payment Exception – Fraud Check Implementation

The Fraud Check implementation approach is similar to the Credit Check. The difference is that the Fraud Check performs validations to ensure that the payment transaction's origin and destination countries are not on a prohibited list (for illustration purposes), that the transaction amount does not exceed the limit regulated by the central bank, and so on.
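The fraud rules can be approximated the same way. In this plain-Java sketch, the FraudDetail field names, the prohibited country codes (the user-assigned ISO codes "XX" and "YY") and the regulated limit of 50000 are made-up illustrative values, not the demo's actual decision table.

```java
import java.math.BigDecimal;
import java.util.Set;

// Hypothetical input; field names are illustrative, not taken from the demo's model.
record FraudDetail(String originCountry, String destinationCountry, BigDecimal amount) {}

final class FraudCheckRules {
    // Illustrative values only: a made-up prohibited list and regulatory limit.
    static final Set<String> PROHIBITED = Set.of("XX", "YY");
    static final BigDecimal REGULATED_LIMIT = new BigDecimal("50000");

    // First matching rule wins, as in the credit check sketch.
    static String evaluate(FraudDetail d) {
        if (PROHIBITED.contains(d.originCountry()) || PROHIBITED.contains(d.destinationCountry())) {
            return "PROHIBITED_COUNTRY";
        }
        if (d.amount().compareTo(REGULATED_LIMIT) > 0) {
            return "REGULATED_LIMIT_EXCEEDED";
        }
        return "PASSED";
    }
}
```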

The results from both the checklimit-service and checkfraud-service are produced to separate Kafka topics.

We introduced a Kafka Streams API service called validation-handler that consumes these results in CloudEvents format and performs the necessary consolidation.

Based on the consolidated check limit and check fraud status, the event data is produced either to a Kafka topic consumed directly by core-service (success scenario) or to a Kafka topic consumed by the exception handling service, which is a Kogito workflow service (failed validation scenario).
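The consolidation step can be sketched as a small in-memory joiner: it holds the first check result for a transaction until the other one arrives, then routes to core-service only if both checks passed. In Kafka Streams this would more likely be a windowed stream-stream join or a keyed aggregation backed by a state store. The CheckResult shape, the check names and the core-service topic name are hypothetical; opay.exception.events is the exception-handler's incoming topic from the configuration shown later in this post.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Result of one check for one transaction (hypothetical shape).
record CheckResult(String transactionId, String checkName, boolean passed) {}

// Destination decided once both results for a transaction are available.
record Routing(String transactionId, String topic) {}

final class ValidationConsolidator {
    // Hypothetical topic name for core-service.
    static final String CORE_TOPIC = "core-service-topic";
    // Incoming topic of exception-handler, as configured in this post.
    static final String EXCEPTION_TOPIC = "opay.exception.events";

    // Results waiting for their counterpart, keyed by transaction ID.
    private final Map<String, CheckResult> pending = new HashMap<>();

    // Returns a routing decision once both checks for the transaction have arrived.
    Optional<Routing> accept(CheckResult result) {
        CheckResult other = pending.get(result.transactionId());
        if (other == null || other.checkName().equals(result.checkName())) {
            // First result (or a duplicate of the same check): keep waiting.
            pending.put(result.transactionId(), result);
            return Optional.empty();
        }
        pending.remove(result.transactionId());
        boolean allPassed = result.passed() && other.passed();
        return Optional.of(new Routing(result.transactionId(), allPassed ? CORE_TOPIC : EXCEPTION_TOPIC));
    }
}
```

A real deployment also has to decide what happens when the second result never arrives; with a windowed join, that transaction simply produces no joined output.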

The same Apache Camel service we used earlier in the Credit Check implementation is used for the Fraud Check for data conversion and data enrichment.

At this point, we can see that these decision services are truly loosely coupled and event-driven: their only data exchange endpoint is Apache Kafka.

Note: If you prefer to use the CloudEvents format for all services, Quarkus supports CloudEvents for Kafka. You can refer to the details here.

The Payment Exceptions Handling

The exception handling in this post mainly revolves around credit check and fraud check validation exceptions, but you can apply the same idea to other payment exceptions, such as transaction runtime errors.

Payment Exceptions Handling Implementation

The exception-handler Kogito service consumes the consolidated Kafka event messages that originate from the checklimit-service and checkfraud-service results.

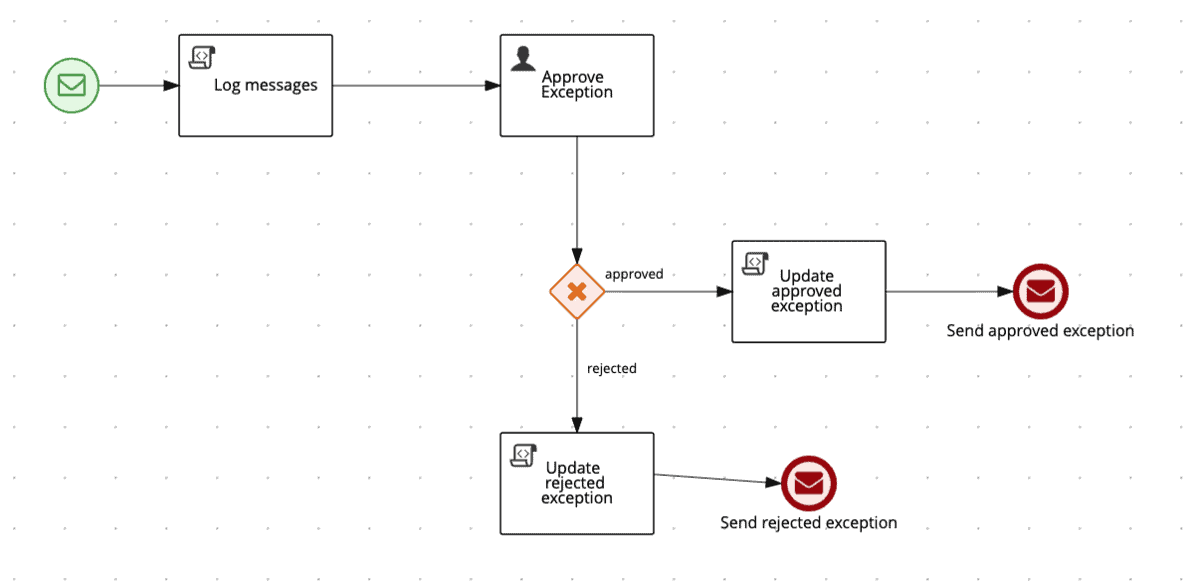

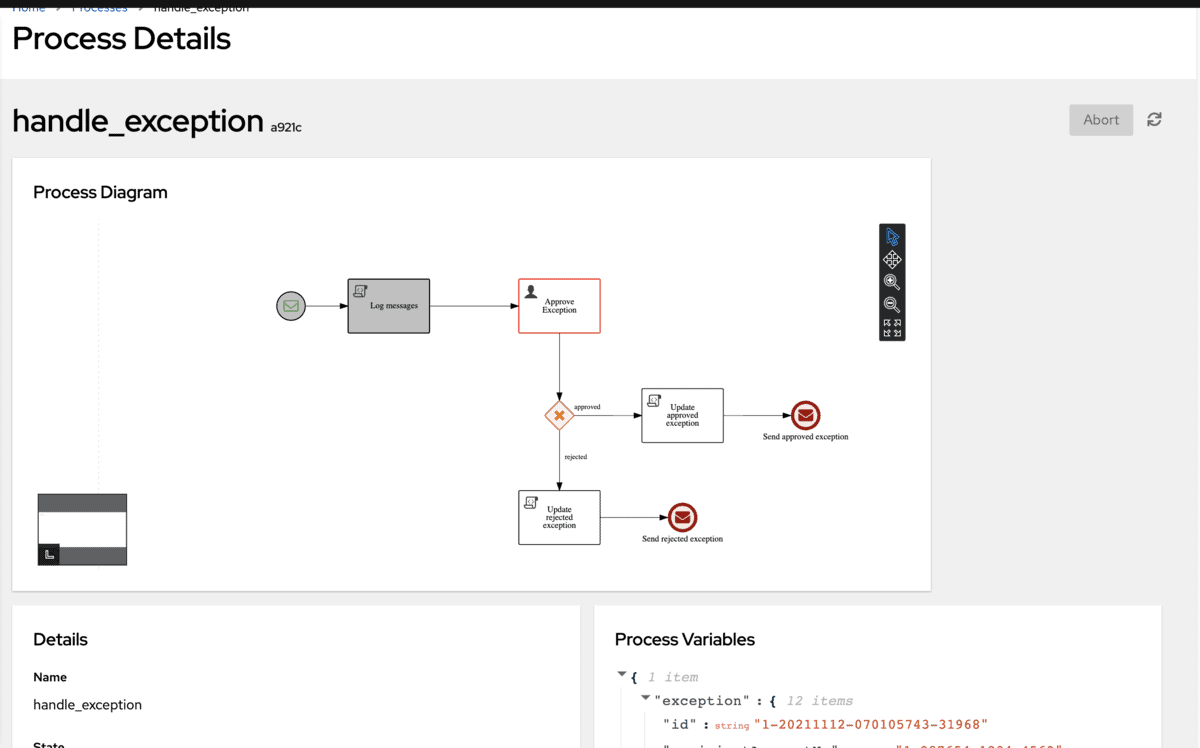

From the workflow diagram, you can see that messages are consumed from a Kafka topic (indicated by the green message node) and that every event triggers a new process instance. The first node in the process instance is a human task (Approve Exception), where payment officers review the payment exception and approve or reject it. Once the exception is approved or rejected, an event message with the appropriate status and content is sent to another Kafka topic for further processing (indicated by the red message nodes).

The Kogito business workflow service settings are configured in application.properties. The following shows part of the property file. Similar to the previous decision service sample, we configure consuming and producing Kafka messages via the incoming and outgoing stanzas.

```properties
# Kogito process instances Kafka settings

# Kafka incoming messages from exception events
mp.messaging.incoming.exceptions.connector=smallrye-kafka
mp.messaging.incoming.exceptions.topic=opay.exception.events
mp.messaging.incoming.exceptions.value.deserializer=org.apache.kafka.common.serialization.StringDeserializer

# Kafka outgoing messages for the exceptions response
mp.messaging.outgoing.exceptionresponse.connector=smallrye-kafka
mp.messaging.outgoing.exceptionresponse.topic=exception.response.events
mp.messaging.outgoing.exceptionresponse.value.serializer=org.apache.kafka.common.serialization.StringSerializer
```

To persist process instances and their related process data, we need to configure the Kogito service to send process instance and task event data to predefined Kafka topics, which are consumed by the Kogito Data Index Service and stored in Infinispan. Please refer to the next section (Kogito Management Console) for more detail on these persistence capabilities.

To enable this process eventing, a number of add-ons must be configured in the pom.xml, along with some additional properties in application.properties.

mp.messaging.outgoing.kogito-processinstances-events.connector=smallrye-kafka

mp.messaging.outgoing.kogito-processinstances-events.topic=kogito-processinstances-events

mp.messaging.outgoing.kogito-processinstances-events.value.serializer=org.apache.kafka.common.serialization.StringSerializer

mp.messaging.outgoing.kogito-usertaskinstances-events.connector=smallrye-kafka

mp.messaging.outgoing.kogito-usertaskinstances-events.topic=kogito-usertaskinstances-events

mp.messaging.outgoing.kogito-usertaskinstances-events.value.serializer=org.apache.kafka.common.serialization.StringSerializer

mp.messaging.outgoing.kogito-variables-events.connector=smallrye-kafka

mp.messaging.outgoing.kogito-variables-events.topic=kogito-variables-events

mp.messaging.outgoing.kogito-variables-events.value.serializer=org.apache.kafka.common.serialization.StringSerializer

# Kogito Data Index Service

%dev.kogito.service.url=http://localhost:8089

%dev.kogito.dataindex.http.url=http://localhost:8180

%dev.kogito.dataindex.ws.url=ws://localhost:8180

%prod.kogito.dataindex.http.url=http://${DATA_INDEX_HOST}

%prod.kogito.dataindex.ws.url=ws://${DATA_INDEX_HOST}

quarkus.http.cors=true

# Persistency for process data

%dev.quarkus.infinispan-client.server-list=localhost:11222

%dev.quarkus.infinispan-client.use-auth=false

%prod.quarkus.infinispan-client.server-list=${INFINISPAN_HOST}

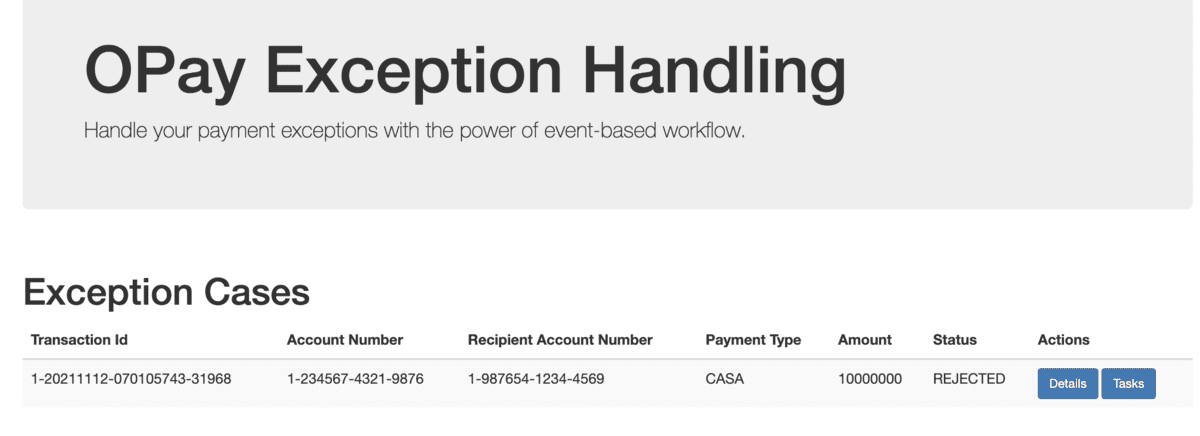

%prod.quarkus.infinispan-client.use-auth=falseOnce the exception-hanlder Kogito service is running, the OpenShift route URL created in the demo deployment will brought you to the following approval screen for the payment exceptions.

Approved exceptions are sent back to casa.new.events to be processed again. In this case, as part of the process, a boolean value is set to indicate that the transaction is an approved exception and should be bypassed by checklimit-service and checkfraud-service.

Note: Approved payment exceptions could instead be sent directly to the Kafka topic consumed by core-service, which simplifies the process. There is no specific reason why I chose to send them back to casa.new.events here.

Rejected exceptions need no further processing: the response is produced to the casa.response.events Kafka topic to be consumed by casa-service. Based on this response, casa-service updates its own database to mark the payment as rejected with the given reason.
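The approve and reject branches can be summarised with the sketch below. It is plain Java rather than the BPMN process: the PaymentException and OutboundMessage shapes and the status strings are hypothetical, and the approvedException field stands in for the boolean value described above. The two topic names are the ones given in the text; where exactly the demo checks the flag is not shown, so shouldSkipChecks is only an illustration.

```java
// Hypothetical exception payload carried through the workflow.
record PaymentException(String transactionId, String reason, boolean approvedException) {}

// Hypothetical outgoing message: target topic, payload and decision status.
record OutboundMessage(String topic, PaymentException payload, String status) {}

final class ExceptionDecisionRouter {
    static final String REPROCESS_TOPIC = "casa.new.events";
    static final String RESPONSE_TOPIC = "casa.response.events";

    // Approved: set the flag and resend for reprocessing.
    // Rejected: send the response to casa-service unchanged.
    static OutboundMessage route(PaymentException ex, boolean approved) {
        if (approved) {
            PaymentException flagged = new PaymentException(ex.transactionId(), ex.reason(), true);
            return new OutboundMessage(REPROCESS_TOPIC, flagged, "APPROVED");
        }
        return new OutboundMessage(RESPONSE_TOPIC, ex, "REJECTED");
    }

    // How a validation stage could honour the flag: skip re-checking approved exceptions.
    static boolean shouldSkipChecks(PaymentException ex) {
        return ex.approvedException();
    }
}
```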

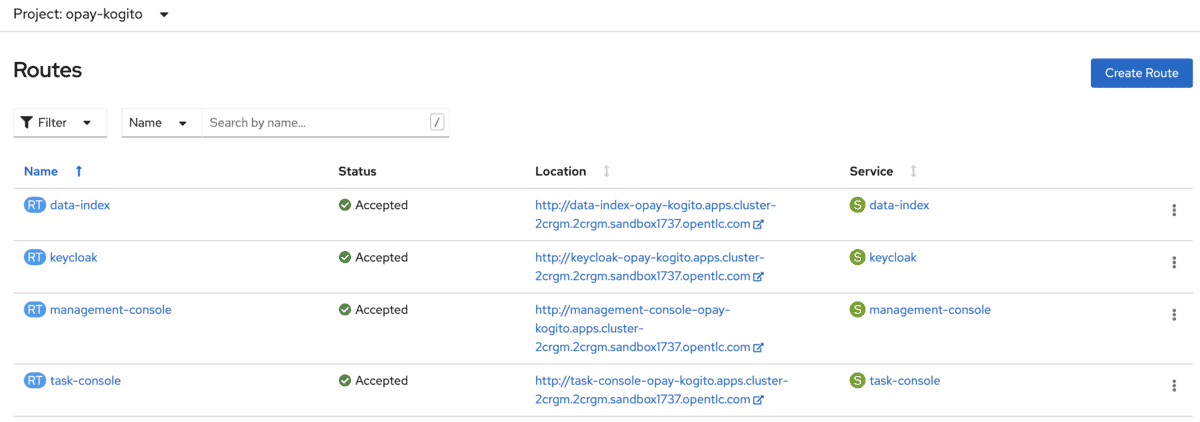

Kogito Management Console

The Kogito Management Console is a user interface for viewing the state of all available Kogito services and managing process instances. You can use the Management Console to view process, subprocess, and node instance details, abort process instances, and view domain-specific process data. The Kogito Management Console requires the Data Index Service and the relevant add-ons to operate properly.

Kogito Data Index Service stores all Kogito events related to processes, tasks, and domain data. The Data Index Service uses Apache Kafka messaging to consume CloudEvents messages from Kogito services, and then indexes the returned data for future GraphQL queries and stores the data in the Infinispan persistence store. The Data Index Service is at the core of all Kogito search, insight, and management capabilities.

Watch the Demo on YouTube

I am sure you want to see the demo in action. The following is the recorded demo posted on YouTube.

To Deploy the Sample Demo

As always, you can head over to GitHub and follow the instructions to deploy the demo. You will need an OpenShift environment to run the demo.

Summary

Once again, we are seeing the light at the end of the tunnel: we have seen how we can use Kogito services to help modernise the payment platform while following the event-driven architecture. Kogito is the right fit for scenarios where you need to implement decision services and business automation in an event-driven architecture, because it is purpose-built for event-driven and cloud-native use cases.

Hello, this is a really good intro video and I enjoyed it. I have one question, though: why can we not use one Kogito service containing all the .bpmn and .dmn models rather than having them as separate services?

Hi Kirito,

You can have multiple BPMN and DMN models in one Kogito project, but you will probably end up with a fat service. With a microservices approach, you would tend to divide them into separate services where possible.